It’s day one of F8, Facebook’s annual developer conference. It’s hard to imagine that, for most of us, the platform is barely 10 years old; it was opened to anyone who had a valid email address in September 2006. But, even beyond the realities of a digital and social world, in which we collectively create and share millions of pieces of content daily, we are now living in an age in which artificial intelligence and virtual reality are real, and are becoming even more embedded in our everyday lives.

It’s day one of F8, Facebook’s annual developer conference. It’s hard to imagine that, for most of us, the platform is barely 10 years old; it was opened to anyone who had a valid email address in September 2006. But, even beyond the realities of a digital and social world, in which we collectively create and share millions of pieces of content daily, we are now living in an age in which artificial intelligence and virtual reality are real, and are becoming even more embedded in our everyday lives.

“Today we’re going to do something different. We’re going to walk through our roadmap for the next 10 years,” says Mark Zuckerberg, wearing his trademark gray tee shirt and jeans, from a stage in Fort Mason in San Francisco, California. “Before we get into detail, I want to talk about our mission, and why the work we’re doing together is more important than it’s ever been. Facebook’s mission is to connect the world, and the Internet has enabled us to access and share more information and ideas than ever before.”

Lofty thoughts, but then he does something unexpected. For the first time I can remember, at least in this type of venue, Zuckerberg ventures into the political. He alludes to politicians who threaten to build walls, and who incite fear of others. “It takes courage to choose hope over fear,” he says. “It’s this hope and this optimism that is behind every important step forward…Instead of building walls, we can help build bridges. And instead of dividing people, we can bring people together, one connection at a time.”

What’s clear is this: Zuckerberg is no longer the callow kid who built a platform most famous for enabling people to poke and throw sheep at each other. He’s matured. He’s evolved from an awkward, brilliant kid to confident, articulate and passionate leader.

But what’s also clear is that Zuckerberg’s ambitions, and the ambitions of Facebook, stretch far beyond what anyone could initially have imagined. Today Facebook is a platform that encompasses a family of apps–WhatsApp, Messenger, Facebook, Instagram and Groups–that serve the communication needs of billions of people around the world.

Even as comparatively late as four years ago, right as the company completed its initial public offering, analysts (such as me) were worried about Facebook’s ability to move beyond the desktop to develop a meaningful mobile strategy. Now the company is focused on three fronts simultaneously: connectivity (perhaps most clearly encapsulated by its “Free Basics” offer, which has been amply documented), virtual reality (what Zuckerberg calls “the next mobile platform”) and artificial intelligence, which underlies pretty much every significant offering Facebook has or will hope to have in the next several years.

It’s a lot to assimilate.

It’s tempting to think of these three objectives as separate entities, but the truth is that each reinforces each other in some way. Connectivity of course is the infrastructure that enables digital tools to reach people at scale. Artificial Intelligence is at a critical juncture, because (at least in my opinion) it is still something many people think of as science fiction (films like Her, Ex Machina,Black Mirror come to mind) rather than part of our daily reality.

But if you use Facebook, you already interact with AI on a daily basis. The “Moments” app includes facial recognition based on AI, Messenger uses AI to filter spam (sometimes a bit too well, as recent news stories will attest), Newsfeed uses AI to curate your newsfeed and Accessibility uses AI to interpret photos for the blind. So AI is already here, already so much a part of the way we communicate now and in the future. Not for everything, but it underpins as much of what you see as how you interact.

And AI is the foundation of perhaps the most talked-about piece of news released today: Messenger Platform, which enables developers to build chat bots that can interact with people to do things as seemingly simple as request information or order products or handle customer service issues. The idea is that, as a user, the more you use it, the more it is able to tailor its recommendations to you. For Facebook, the more examples they have of how it’s used, the more they’re able to refine the AI to be faster, easier, more error-free, more naturalistic.

It’s appealing for many of us to hear from Zuckerberg that “Now, in order to order flowers from 1-800-FLOWERS, you never have to call 1-800-Flowers again.” This kind of interaction, pretty simple on its face, can also include a lot of complexity, and it’s a great use of AI as it does not necessarily require that one speak with a real person. This is particularly interesting for brands that want to scale customer conversations across digital channels, either for service, sales or other purposes, but of course its usefulness depends on efficient, intuitive, creepiness-free interactions.

Virtual reality is the piece that, beyond the early adopters, still feels remote to a lot of people. If you’re not a dedicated gamer, what use do you have for it? Is it like Google Glass, which seemed cool (or not) at the time? Will the price point and user experience and available content ever make it accessible enough for everyone? Judging by the number of companies I hear who have spun up VR “innovation centers” I wouldn’t bet against it, at least on the content front.

In Zuckerberg’s words, “VR has the potential to be the most social platform.” That’s interesting when we think about how quickly technology moves and how clunky it always is in its first incarnation. In the future, strapping on a heavy piece of plastic will look quaint (it does already), and we’ll interact with VR in ways that fit more naturally into our daily lives, whether with our phones, or with glasses, or with new devices or immersive screens we can barely imagine today.

My two takeaways so far this morning are this: 1) the first phase of digital is behind us; ten years ago we had one app—Facebook—that did pretty much one thing. Today there’s a family of apps, and an acknowledgement that we each want and need different types of connectivity for different situations. This brings extraordinary opportunities and extraordinary complexity.

But what’s also clear is that 2) this next phase of Facebook is explicitly about opening APIs, building ecosystems and fostering development. The cynical view is that this demonstrates Zuckerberg’s thirst for world domination, while the optimist hopes for a bit of what he alluded to at the top of his keynote: less wall-building, more bridge-building.

As always, I appreciate your comments.

—–

Here for reference is a complete list of the announcements made today (below content and links courtesy of Facebook):

-

- Live API: Facebook Live has been incredibly successful, and now we’re opening our API so developers can design even more ways for people and publishers to interact and share in real time on Facebook.

- Bots for Messenger: As part of the new Messenger Platform, bots can provide anything from automated subscription content like weather and traffic updates, to customized communications like receipts, shipping notifications, and live automated messages — all by interacting directly with the people who want to get them. The Messenger Send / Receive API will support not only sending and receiving text, but also images and interactive rich bubbles containing multiple calls-to-action.

- Facebook 360: We’ve designed and built a 3D-360 camera system,Facebook Surround 360, which produces sharp, truly spherical footage in 3D. The system includes stitching technology that seamlessly marries the video from 17 cameras, vastly reducing post-production effort and time. The design specs and stitching code will be available on GitHub this summer.

- Profile Expression Kit: People can now use third-party apps to create fun and personality-infused profile videos with just a few taps. The closed beta kicks off today with support for six apps: Boomerang by Instagram, Lollicam, BeautyPlus, Cinemagraph Pro by Flixel, MSQRD and Vine.

- Free Basics Simulator & Demographic Insights: It’s now easier for developers to build for Free Basics with the Free Basics Simulator, which lets them see how their service will appear in the product, and Demographic Insights, which helps them better understand the types of people using their services.

- Account Kit: Account Kit gives people the choice to log into new apps with just their phone number or email address, helping developers grow their apps to new audiences.

- Facebook Analytics for Apps updates: More than 450,000 unique apps already use this product to understand, reach, and expand their audiences, and we’re introducing more features to help developers and marketers grow their businesses with deeper audience insights, and push and in-app notifications (beta).

- Quote Sharing: Quote Sharing is new way for people to easily share quotes they find around the web or in apps with their Facebook friends.

- Save Button: The Save Button lets people save interesting articles, products, videos, and more from around the web into their Saved folder on Facebook, where they can easily access it later from any device.

- Rights Manager: Rights Manager is a new tool that helps publishers manage and protect video on Facebook at scale, while also giving them increased flexibility and control for the use of their videos.

- Crossposted Videos and Total Performance Insights: The update makes it easier for publishers to reuse and monitor their videos across different posts and Pages, and to see the total performance of the video across Facebook.

- Instant Articles: The Instant Articles program is now open to all publishers—of any type, anywhere in the world.

On Sunday, I had the opportunity to discuss my latest research on image intelligence at TEDxBerlin; today I spoke about it at the IFA+ Summit. It’s inspiring to look at technology through two separate lenses: one that is aspirational and shows us a little about what the future may bring, and one that is more practical and focused on real-world implications.

On Sunday, I had the opportunity to discuss my latest research on image intelligence at TEDxBerlin; today I spoke about it at the IFA+ Summit. It’s inspiring to look at technology through two separate lenses: one that is aspirational and shows us a little about what the future may bring, and one that is more practical and focused on real-world implications.

Last week I had the pleasure of being interviewed at

Last week I had the pleasure of being interviewed at  It’s day one of F8, Facebook’s annual developer conference. It’s hard to imagine that, for most of us, the platform is barely 10 years old; it was opened to anyone who had a valid email address in September 2006. But, even beyond the realities of a digital and social world, in which we collectively create and share millions of pieces of content daily, we are now living in an age in which artificial intelligence and virtual reality are real, and are becoming even more embedded in our everyday lives.

It’s day one of F8, Facebook’s annual developer conference. It’s hard to imagine that, for most of us, the platform is barely 10 years old; it was opened to anyone who had a valid email address in September 2006. But, even beyond the realities of a digital and social world, in which we collectively create and share millions of pieces of content daily, we are now living in an age in which artificial intelligence and virtual reality are real, and are becoming even more embedded in our everyday lives.![The Data-Driven Business_FINAL[3]](https://susanetlinger.files.wordpress.com/2016/02/the-data-driven-business_final3.jpg?w=232&h=300) As we are continually reminded, data is everywhere, but what are organizations actually doing with it?

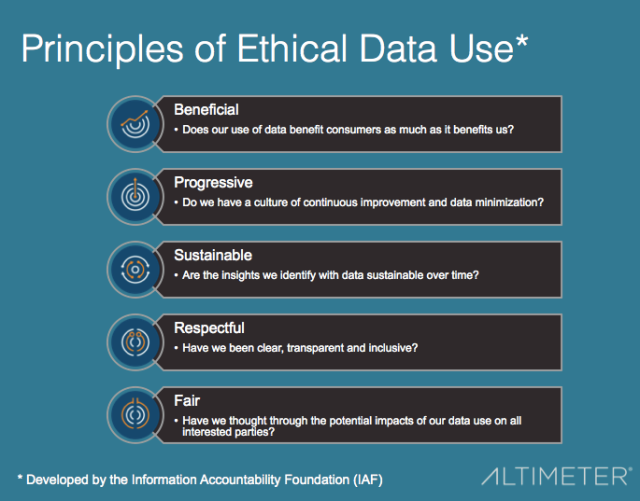

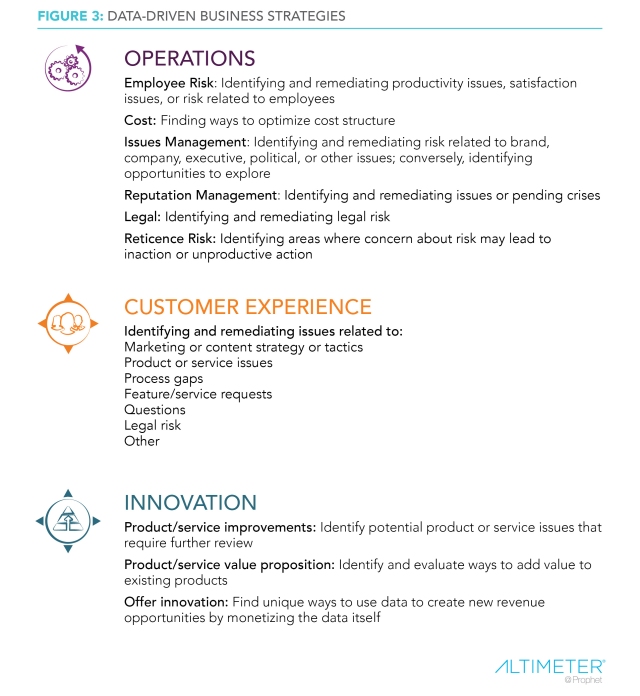

As we are continually reminded, data is everywhere, but what are organizations actually doing with it? The report is intended for business people who are interested in learning more about the impact of data proliferation and how it may be used to drive competitive advantage. It lays out the key use cases for data-driven value creation, presents examples of organizations that are using data creatively and effectively, outlines the most pressing issues and opportunities, and presents best practices that show how data strategy can transform organizations.

The report is intended for business people who are interested in learning more about the impact of data proliferation and how it may be used to drive competitive advantage. It lays out the key use cases for data-driven value creation, presents examples of organizations that are using data creatively and effectively, outlines the most pressing issues and opportunities, and presents best practices that show how data strategy can transform organizations. A few weeks ago, my Altimeter colleagues and I published our

A few weeks ago, my Altimeter colleagues and I published our